Drupal is great for SEO

Lots of people complain about Drupal’s poor SEO, but most platforms need at least some setup and Drupal is no different. If Drupal always had poor SEO, you wouldn’t see half of Fortune 500 companies using it (as per drupal.org’s big stats)!

All standard SEO requirements are supported

Search around for “the most important SEO tricks/strategies/techniques” and you’ll find much the same list wherever you end up. It’s all about providing clean, well-structured and accessible content that makes sense to automated systems (like search engines), and by now the factors that affect that performance are fairly well known.

There’s no excuse for dropping the ball on any of these crucial pieces of metadata and strategies. They are all supported, either out of the box or through well-established community modules:

✅ Page titles

Use templates to generate them automatically, then override when needed. Modules are available to ensure uniqueness.

✅ Page descriptions

Automate page descriptions via templates, then override when needed

✅ Open Graph Metatags

Set automatic values and templates for important Open Graph metadata

✅ Descriptive, user-friendly URLs

Set automatic templates for your content URLs

✅ JSON-LD metadata

Populate common metadata automatically with simple templates, or override when needed.
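To make the idea concrete, here is a minimal sketch in Python of what a templated JSON-LD block for an article could look like once the tokens have been filled in. It is not how Drupal or any particular module generates the markup; the function name `article_json_ld` and all the sample values are hypothetical, and only the schema.org property names are standard.

```python
import json


def article_json_ld(title, description, url, published, author_name):
    """Build a schema.org Article description wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": title,
        "description": description,
        "url": url,
        "datePublished": published,  # ISO 8601 date string
        "author": {"@type": "Person", "name": author_name},
    }
    body = json.dumps(data, indent=2)
    # Escape "</" so content containing "</script>" cannot close the tag early.
    # "\/" is a valid JSON escape for "/", so the data is unchanged when parsed.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical values standing in for tokens such as the node title and URL.
print(article_json_ld(
    "Drupal is great for SEO",
    "Why Drupal covers the standard SEO requirements.",
    "https://example.com/blog/drupal-seo",
    "2024-01-01",
    "Example Author",
))
```

The "template, then override" pattern maps onto the function arguments: defaults come from the content itself, and an editor-supplied value simply replaces the argument for that page.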

✅ XML Sitemap

Generate standard sitemaps to get your site crawled
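For readers unfamiliar with the format, the following Python sketch shows roughly what a standard sitemap contains. It only illustrates the sitemaps.org XML structure, not the way a Drupal sitemap module builds its output; the function name `build_sitemap` and the example URLs are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for (absolute URL, last-modified YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters are escaped for us
        ET.SubElement(url, "lastmod").text = lastmod
    xml_body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + xml_body


# Hypothetical pages; a real site would list its published content.
print(build_sitemap([
    ("https://example.com/", "2024-01-01"),
    ("https://example.com/blog/drupal-seo", "2024-02-01"),
]))
```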

✅ Alt text on images

Make it mandatory at the point of image upload, to ensure it’s never missing

✅ Robots.txt

Drupal includes a robots.txt out of the box, with rules covering key admin/internal URLs. It’s easy to merge in your existing robots.txt, and there are modules available for editing it via the UI.

    #
    # robots.txt
    #
    # This file is to prevent the crawling and indexing of certain parts
    # of your site by web crawlers and spiders run by sites like Yahoo!
    # and Google. By telling these "robots" where not to go on your site,
    # you save bandwidth and server resources.
    #
    # This file will be ignored unless it is at the root of your host:
    # Used: http://example.com/robots.txt
    # Ignored: http://example.com/site/robots.txt
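When merging rules from an existing robots.txt, it can help to check that the combined file still allows and blocks what you expect. The sketch below uses Python's standard `urllib.robotparser` to do that. The rules in `SAMPLE_RULES` and the helper name `is_crawlable` are hypothetical and chosen for illustration; substitute the contents of your own file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only; use your site's real robots.txt.
SAMPLE_RULES = """\
User-agent: *
Disallow: /admin/
Disallow: /user/login
"""


def is_crawlable(rules, url, user_agent="*"):
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/blog/drupal-seo",
    "https://example.com/admin/config",
):
    print(url, is_crawlable(SAMPLE_RULES, url))
```

Running a check like this over a handful of important URLs before deploying is a cheap way to catch a rule that accidentally blocks public content.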

Ready for all your tools

There are community-supported modules at the ready to cover the basics for most setups. Plus, the Drupal CMS project looks likely to further improve the setup experience for a range of standard SEO tools.

Customisation

Standard templating and tokens will get you quite far, but some customisations to your metadata or setup will need custom code to power them. After a quick conversation we can usually give direction on how to get things done, and often get a change out the same day.

Set up custom events in GA4 or similar, to track your customer journeys and sales pipelines

Add JSON-LD and other metadata to provide additional information for search engines or other consumers of your site data

Push your sales data to Google, MailChimp, Adobe or your destination of choice, for deeper analysis
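As a rough illustration of the first item, a custom GA4 event sent from the server side could be assembled as below. This is a sketch only: it builds the JSON body in the shape used by the GA4 Measurement Protocol but does not send it. The event name `quote_requested`, its parameters and the client ID are hypothetical, and the naming rule in `EVENT_NAME` is an assumption that should be checked against Google's current documentation.

```python
import json
import re

# Assumed GA4 naming rule: starts with a letter, then letters, digits or
# underscores, 40 characters at most.
EVENT_NAME = re.compile(r"^[A-Za-z][A-Za-z0-9_]{0,39}$")


def build_ga4_event(client_id, name, params):
    """Build a Measurement Protocol style request body for one custom event."""
    if not EVENT_NAME.match(name):
        raise ValueError(f"invalid event name: {name!r}")
    return {
        "client_id": client_id,
        "events": [{"name": name, "params": dict(params)}],
    }


# Hypothetical event marking one step in a sales pipeline.
payload = build_ga4_event(
    "555.1234567890",
    "quote_requested",
    {"product_type": "site_audit", "value": 1},
)
print(json.dumps(payload, indent=2))
```

Sending the body would additionally need your own measurement ID and API secret, which are deliberately left out here.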

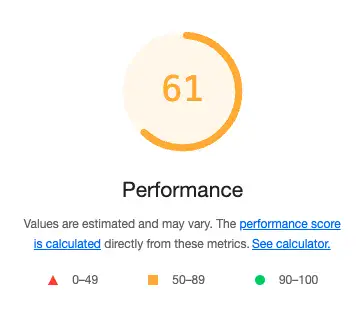

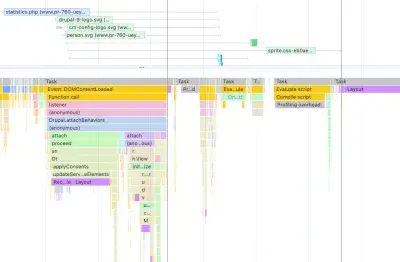

Site performance

It’s well known that site performance, as measured by Core Web Vitals, also influences your search ranking. If your site isn’t performing, either something is wrong or performance wasn’t given enough priority during development.

ComputerMinds are experienced at diagnosing and rectifying performance issues. Consider getting in touch for a Site Audit, where we can assess more than just your SEO and performance basics.